We all know and love OpenSSH’s scriptability. For example:

# Burn file.iso from 'host' locally without using disk space

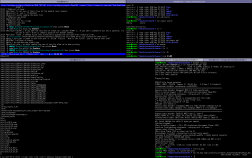

ssh host cat file.iso | cdrecord driveropts=burnfree speed=4 -

# Create an uptime high score list

for host in hostone hosttwo hostthree hostfour

do

echo "$(ssh -o BatchMode=yes "$host" "cut -d' ' -f1 /proc/uptime" ||

echo "0 host is unavailable:") $host"

done | sort -rn

The former is something you’d just do from your own box, since you need to be physically present to insert the CD anyway. But what if you want to automate the latter (commands that repeatedly poll or invoke something) from a potentially untrustworthy box?

Preferably, you’d use something other than ssh. Perhaps an entry in inetd that invokes the app, or maybe a CGI script (potentially over SSL and with password protection). But let’s say that for whatever reason (firewalls, available utilities, application interfaces) you do want to use ssh.

In those cases, you won’t be there to type in a password or unlock your ssh keys, and you don’t want someone to just copy the passwordless key and run their own commands.

OpenSSH has a lot of nice features, and some of them relate to limiting what a user can do with a key. If you generate a passwordless key pair (one with an empty passphrase) with ssh-keygen, you can add the following to .ssh/authorized_keys on the remote host:

command="uptime" ssh-rsa AAAASsd+olg4(rest of public key follows)

Select the key to use with ssh -i key .... This will make sure that anyone authenticated with this key pair will only be able to run “uptime” and not any other commands (including scp/sftp). This seems clever enough, but we’re not entirely out of the woods yet. SSH supports more than running commands.
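To see why the client's request doesn't matter: sshd runs the forced command no matter what the client asked for, and hands the original request to it in the SSH_ORIGINAL_COMMAND environment variable. Here's a minimal sketch of a wrapper you could point command= at instead of plain uptime. The function name run_forced and the idea of logging the ignored request are my own illustration, not something OpenSSH ships.

```shell
#!/bin/sh
# Sketch of a forced-command wrapper. run_forced is a hypothetical helper:
# it runs only the command given as its arguments, and merely reports
# (on stderr) whatever the client tried to run instead.
run_forced() {
    # sshd sets SSH_ORIGINAL_COMMAND when the client supplied a command.
    if [ -n "${SSH_ORIGINAL_COMMAND:-}" ]; then
        printf 'ignored client request: %s\n' "$SSH_ORIGINAL_COMMAND" >&2
    fi
    # Run the fixed command chosen by the server side, never the client's.
    "$@"
}

# Demo without sshd: pretend the client asked for something else.
# The subshell keeps the fake variable from leaking into the caller.
( SSH_ORIGINAL_COMMAND='cat /etc/passwd'; run_forced echo 'only this runs' )
```

In a real setup the last line would be something like run_forced uptime, and the script's path (whatever you choose) would go inside command="...".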

Someone might use your key to forward spam via local port forwarding, or they could open a bunch of ports on your remote host and spoof services with remote port forwarding.

Some less well documented authorized_keys options will help:

# This is really just one line, with no spaces between the options:

command="uptime",from="192.168.1.*",no-port-forwarding,no-X11-forwarding,no-pty ssh-rsa AAAASsd+olg4(rest of public key follows)

Now we’ve disabled port forwarding (including SOCKS), X11 forwarding (shouldn’t matter, but hey), and PTY allocation (which could otherwise be abused for DoS). And for laughs, we’ve limited the allowed clients to a subnet of IPs.
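One thing that's easy to get wrong: sshd reads the options as a single comma-separated field, and spaces are only allowed inside double quotes. If you assemble the line by hand, a stray space after a comma breaks the entry. A small sketch that builds the field mechanically, where join_options is a made-up helper name and the key is the same placeholder as above:

```shell
#!/bin/sh
# join_options is a hypothetical helper: it joins its arguments with
# commas and adds no spaces, which is the form the options field needs.
join_options() {
    # "$*" joins the arguments with the first character of IFS; the
    # subshell keeps the IFS change from leaking into the caller.
    ( IFS=,; printf '%s\n' "$*" )
}

options=$(join_options \
    'command="uptime"' \
    'from="192.168.1.*"' \
    no-port-forwarding no-X11-forwarding no-pty)

# Options, one space, then the public key exactly as ssh-keygen wrote it.
# The key below is a placeholder, not a real key.
printf '%s %s\n' "$options" 'ssh-rsa AAAASsd+olg4(rest of public key follows)'
```

Swap the placeholder for the contents of your .pub file and append the output to .ssh/authorized_keys on the remote host.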

Clients can still hammer the service, and depending on the command, that could cause DoS. However, we’ve drastically reduced the risks of handing out copies of the key.